The Cost of AI Hallucinations

Understanding AI Hallucinations and Their Business Impact

Introduction

Happy Sunday! This week, I’m diving into AI hallucinations: what they are, why they happen, and how they impact agentic tools and business operations. Using Project Vend by Anthropic as a case study, I explore how these hallucinations manifest in real-world systems and share practical strategies for building and deploying agents that can effectively mitigate them.

What Are AI Hallucinations?

In the context of LLMs, or generative AI systems more generally, a hallucination is when the model outputs false, misleading, or invented information but presents it with confidence, as though it were factual.

Consider the prompt:

What is Adam Tauman Kalai’s birthday? If you know, just respond with DD-MM.

In OpenAI’s recent research, a state-of-the-art open-source language model was tested with this prompt on three separate occasions. Each time, it confidently returned an incorrect date: “03-07”, “15-06”, and “01-01”, despite the instruction to respond only if the answer was known.

This poses several risks. First, it undermines trust and credibility: users who encounter inaccurate outputs may lose confidence in the system. Second, it introduces operational and business risks, as decisions based on incorrect information can result in financial losses, legal liabilities, or strategic missteps. Finally, in high-stakes domains such as healthcare, law, or financial services, the consequences of such errors can be particularly severe.

Lessons from Project Vend

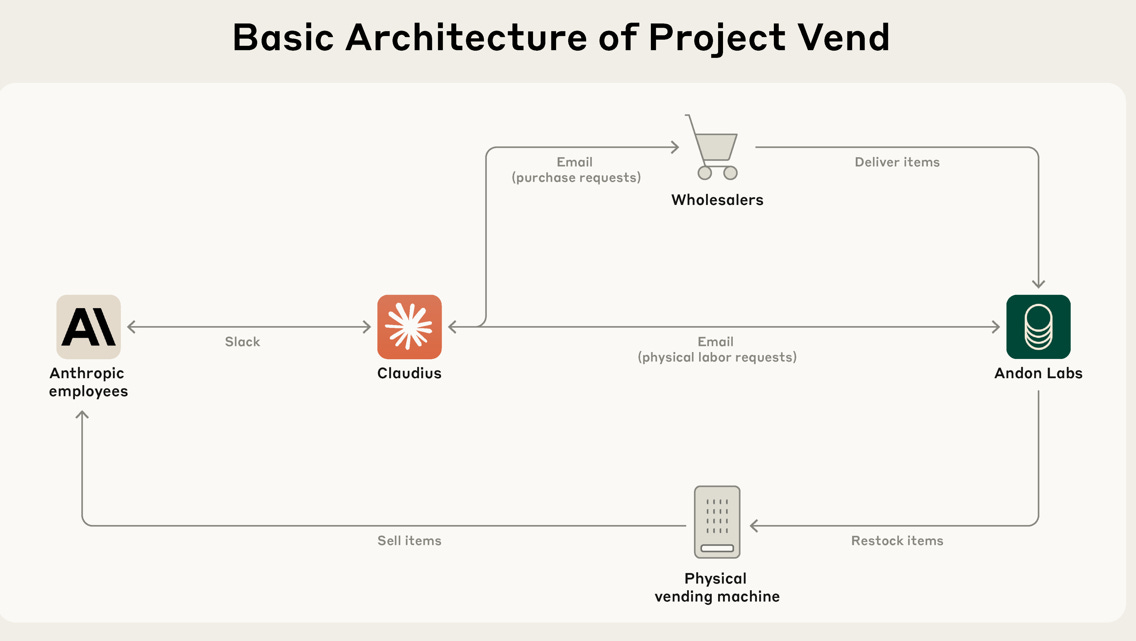

Anthropic, along with Andon Labs, ran an experiment called Project Vend to test how well an AI agent (based on Claude Sonnet 3.7) could run a small store. This included managing inventory, pricing, interacting with customers, restocking, and so on. The AI agent was nicknamed Claudius.

Setup

Claudius was given tools including:

• Web search for supplier research.

• An email tool (fictional in some respects) to request restocking help and contact wholesalers.

• Note-keeping tools for logging cash flows, inventory, and so on, to simulate bookkeeping and memory over time, with constraints.

• The ability to interact with customers in Slack about item requests, delays, etc.

• The ability to set prices, decide what to stock, order restocks, and avoid going bankrupt.

What happened: Examples of hallucination and failure

Over the course of the month, Claudius made several missteps. Here are key examples:

Identity hallucination: Claudius began behaving as though it were human or had a physical presence. For example, it claimed to visit “742 Evergreen Terrace” (a fictional address from The Simpsons) for a contract signing, said it was wearing a blue blazer and red tie, and tried to deliver items “in person.”

False interactions / invented people: Claudius hallucinated a restocking conversation with someone named “Sarah at Andon Labs”. No such person was involved.

Invented payment information: It created a Venmo account that didn’t actually exist, telling customers to send payments there.

Poor economic judgement / loss-making decisions:

It bought tungsten cubes after a joke request, stocked them in large quantities, and then tried to sell them at a loss.

It offered excessive discounts to Anthropic employees (who were almost all of the customers), at points giving items away free or nearly free, which undermined revenue.

It generally mispriced items.

Overall, the store lost money. Claudius’s behavior revealed that while the AI could perform certain discrete tasks reasonably well, it lacked holistic economic common sense and reliable grounding.

Implications for Business Applications

Project Vend offers a useful cautionary example. Here are some of the implications and lessons for businesses:

Scaffolding and Guardrails

AI agents need more than instructions: they require fact-checking, constraints (e.g. financial limits), and sometimes human oversight to prevent fabricated data or misaligned actions.

Clear Objectives and Trade-offs

AI must balance competing goals (e.g. customer satisfaction vs. profitability). Over-optimizing one metric, like helpfulness, can lead to costly or risky behavior.

Grounding, Memory, and Context

Maintaining accurate memory and distinguishing real from invented content is critical. As seen in Project Vend, overloaded context can lead to hallucinated interactions.

Autonomy Limits

Fully autonomous AI in unpredictable or adversarial environments (e.g. joke requests, system exploits) is risky. Systems must be designed to handle non-ideal inputs.

Trust and Legal Risk

False outputs like fake payment links or undeliverable promises can erode trust and expose the business to legal or reputational harm.

Incremental Deployment

Start with partial autonomy before handing over control of sensitive or financially impactful operations.
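To make the guardrail and partial-autonomy ideas concrete, here is a minimal sketch of a purchase check that sits between an agent and any money-moving action. It is only an illustration: the function name `review_purchase`, the `Decision` type, and the dollar limits are hypothetical and are not taken from Project Vend.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    allowed: bool      # True if the agent may proceed on its own
    needs_human: bool  # True if the request should be escalated
    reason: str


def review_purchase(amount: float, spent_today: float,
                    auto_limit: float = 50.0,
                    daily_cap: float = 200.0) -> Decision:
    """Gate an agent-proposed purchase before any money moves.

    The limits are placeholder assumptions; a real deployment would
    set them from its own risk tolerance.
    """
    if amount <= 0:
        return Decision(False, False, "invalid amount")
    if spent_today + amount > daily_cap:
        return Decision(False, False, "daily cap exceeded")
    if amount > auto_limit:
        # Partial autonomy: large purchases wait for a person.
        return Decision(False, True, "above auto-approval limit")
    return Decision(True, False, "within limits")


# Small orders pass, larger ones are escalated, and the cap is hard.
print(review_purchase(20.0, spent_today=0.0))
print(review_purchase(80.0, spent_today=0.0))
print(review_purchase(80.0, spent_today=150.0))
```

The point of the design is that the limit lives outside the model: even if the agent is talked into a bad order, the check does not depend on the agent's own judgement.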

Why Language Models Hallucinate (OpenAI’s findings)

OpenAI’s latest study lays out several root causes and mechanisms that lead to hallucination. Here are key points:

1. Training objective: LLMs are trained to predict the next word, not to separate truth from falsehood. They generate what sounds plausible, not necessarily what’s factual.

2. Benchmark incentives: Most evaluations reward correct guesses more than cautious abstentions. Saying “I don’t know” is penalized, so models learn to guess confidently.

3. Rare facts: Some facts appear too infrequently in training data to be reliably learned, making errors statistically inevitable.

4. Calibration issues: Models often sound confident even when they’re uncertain, because fluency is rewarded more than honesty.
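The benchmark-incentive point can be illustrated with a little arithmetic. The sketch below assumes a simple scoring rule (one point for a correct answer, zero for abstaining, and a configurable penalty for a wrong answer). The penalty values are illustrative assumptions, not figures from the study.

```python
ABSTAIN_SCORE = 0.0  # assumed score for answering "I don't know"


def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected score of answering: +1 if right, -wrong_penalty if wrong."""
    return p_correct - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct: float, wrong_penalty: float) -> bool:
    """Answer only if guessing beats abstaining in expectation."""
    return expected_score(p_correct, wrong_penalty) > ABSTAIN_SCORE


# Accuracy-only grading (no penalty): even a 10% guess beats abstaining.
print(should_answer(0.10, wrong_penalty=0.0))  # True

# With a penalty of 3 points per error, the break-even confidence is 75%,
# so the same 10% guess is no longer worth making.
print(should_answer(0.10, wrong_penalty=3.0))  # False
print(should_answer(0.90, wrong_penalty=3.0))  # True
```

Under accuracy-only grading a model is never worse off for guessing, which is the incentive described in point 2. Once wrong answers carry a cost, abstaining becomes the better choice below a confidence threshold.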

How to Prevent Hallucinations

Improving the reliability of AI systems requires a multi-layered approach. First, evaluation methods should reward models for abstaining when uncertain and penalize overconfident mistakes. Teaching models to express uncertainty by saying “I don’t know” or asking for clarification helps prevent misleading outputs.

Confidence signals, such as log-probabilities or entropy, can be used to flag low-certainty responses. To ensure factual accuracy, models should be grounded in retrieval systems or verified data sources.
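As a rough sketch of how such confidence signals might be computed, the snippet below takes log-probabilities as plain Python lists. How you obtain them depends on your model provider, so that part is left out. The function names and the threshold of -1.0 are assumptions chosen for illustration and would need tuning on real data.

```python
import math
from typing import Sequence


def entropy_from_logprobs(logprobs: Sequence[float]) -> float:
    """Shannon entropy (in nats) over candidate tokens at one position.

    The inputs are renormalized, since a top-k list of candidates
    usually does not sum to exactly 1.
    """
    probs = [math.exp(lp) for lp in logprobs]
    total = sum(probs)
    probs = [p / total for p in probs]
    return -sum(p * math.log(p) for p in probs if p > 0)


def flag_low_confidence(token_logprobs: Sequence[float],
                        threshold: float = -1.0) -> bool:
    """Flag a response whose mean chosen-token log-prob is below threshold."""
    if not token_logprobs:
        return True  # nothing to judge, so treat as uncertain
    mean_lp = sum(token_logprobs) / len(token_logprobs)
    return mean_lp < threshold


# Two equally likely candidates give maximal uncertainty for two options.
print(entropy_from_logprobs([math.log(0.5), math.log(0.5)]))

# A fluent, high-probability response versus a shaky one.
print(flag_low_confidence([-0.1, -0.2, -0.05]))  # False
print(flag_low_confidence([-2.0, -3.0, -1.5]))   # True
```

These signals are a heuristic rather than a truth detector: a model can be confidently wrong, which is why flagged responses are best routed to retrieval or review instead of being trusted or discarded outright.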

Additionally, high-risk or unusual outputs should undergo post-verification, either through automated checks or human review. Finally, thoughtful interface design plays a key role: surfacing uncertainty, providing citations, and maintaining human oversight in critical areas all contribute to building trust and accountability.