Advancing KV Cache Optimization

Deep Dive into Standard Techniques and Ultra-Low Precision Quantization

Balancing Long Context Reasoning and Memory Efficiency

AI models have significantly improved their ability to handle long sequences, largely due to the attention mechanism in transformers. For each input token, this mechanism computes a key and a value vector. When generating output, a query vector is compared against the keys to assign a relevance score to each token, and the values are weighted by those scores. By doing so, the model effectively down-weights irrelevant information and processes data over long sequences more efficiently.

A key component of this process is the KV cache, which stores previously computed key and value tensors from attention layers. Instead of recomputing them for each new token, the model reuses these cached values, significantly reducing computation time. While this mechanism enables long-context reasoning in LLMs, it also comes with a major challenge: high resource consumption during inference. The cache grows linearly with sequence length, so running inference on long contexts requires substantial GPU memory, and for larger LLMs, a multi-GPU setup is often necessary.
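The reuse described above can be illustrated with a toy single-head decoding loop. This is a minimal sketch, not how any particular framework implements it: `project`, `W_K`, and `W_V` are hypothetical stand-ins for learned projections, and the token itself is used as the query to keep the example short.

```python
import math

def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(dim)]

class KVCache:
    """Append-only store of the keys and values computed so far."""
    def __init__(self):
        self.keys, self.values = [], []

    def append(self, key, value):
        self.keys.append(key)
        self.values.append(value)

def project(token, weight):
    """Hypothetical stand-in for a learned projection: scale each feature."""
    return [t * w for t, w in zip(token, weight)]

W_K, W_V = [0.5, -1.0, 2.0], [1.0, 0.25, -0.5]  # toy "weights" (assumed)
tokens = [[1.0, 0.0, 2.0], [0.5, 1.5, -1.0], [2.0, -0.5, 0.0]]

cache = KVCache()
cached_outputs = []
for token in tokens:
    # Only the newest token is projected; older keys/values come from the cache.
    cache.append(project(token, W_K), project(token, W_V))
    cached_outputs.append(attend(token, cache.keys, cache.values))

# Reference: recompute every key and value from scratch at each step.
recomputed_outputs = []
for step in range(1, len(tokens) + 1):
    keys = [project(t, W_K) for t in tokens[:step]]
    values = [project(t, W_V) for t in tokens[:step]]
    recomputed_outputs.append(attend(tokens[step - 1], keys, values))
```

Both loops produce the same outputs, but the cached version performs one projection per step instead of one per token seen so far. The price is that `cache.keys` and `cache.values` gain one entry per generated token.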

Recent research has shown that for small batch sizes, generative inference in LLMs is memory-bound, meaning the main computational bottleneck is memory access rather than raw processing power. The primary limitation when handling long sequences is the memory required to store KV activations throughout inference. To address this, optimizing KV cache storage is crucial. Developing methods to compress the KV cache can enable more efficient long-sequence inference, reducing memory constraints while maintaining model performance.
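To get a feel for the numbers, the size of the cache can be estimated from the model shape. The sketch below assumes a LLaMA-7B-like configuration (32 layers, 32 heads, head dimension 128) and ignores quantization metadata such as scales, zero points, and separately stored outliers, so the low-bit figure is a lower bound.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bits_per_value=16):
    """Bytes needed to hold keys and values for every layer and token."""
    # Factor 2: one key vector and one value vector per token, head, and layer.
    values_stored = 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size
    return values_stored * bits_per_value // 8

# LLaMA-7B-like shape (assumed): 32 layers, 32 heads, head dimension 128.
fp16_bytes = kv_cache_bytes(32, 32, 128, seq_len=4096)
three_bit_bytes = kv_cache_bytes(32, 32, 128, seq_len=4096, bits_per_value=3)

print(fp16_bytes / 2**30)       # 2.0 (GiB)
print(three_bit_bytes / 2**30)  # 0.375 (GiB)
```

Under these assumptions each token costs 0.5 MiB in fp16, so the cache alone reaches 2 GiB at 4,096 tokens and scales linearly from there.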

Optimizing KV Cache: Techniques for Memory-Efficient Inference

At the core of optimizing KV Cache lies the principle of reducing memory consumption, which can be achieved by compressing 'K' (Keys) or 'V' (Values) in the KV pairs. Techniques to compress these components directly impact the efficiency of models, particularly in terms of memory usage and processing speed.

Token-Level Optimizations

KV Cache Selection: This strategy involves identifying and retaining only the most relevant KV pairs to reduce memory usage without compromising model performance.
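One simple way to picture selection is a fixed budget that always keeps the most recent entries and fills the remaining slots with the older entries that received the most attention. The function below is a hypothetical sketch of that idea, not the policy of any specific paper. `attention_scores` is assumed to hold an accumulated attention score per cached token.

```python
def select_kv(keys, values, attention_scores, budget, recent=2):
    """Keep the `recent` newest entries plus the highest-scoring older ones."""
    n = len(keys)
    if n <= budget:
        return list(keys), list(values)
    recent = min(recent, budget)
    recent_ids = set(range(n - recent, n))
    older = [i for i in range(n) if i not in recent_ids]
    # Rank older tokens by how much attention they have received so far.
    older.sort(key=lambda i: attention_scores[i], reverse=True)
    keep = sorted(recent_ids | set(older[:budget - recent]))
    return [keys[i] for i in keep], [values[i] for i in keep]

keys = ["k0", "k1", "k2", "k3", "k4", "k5"]
values = ["v0", "v1", "v2", "v3", "v4", "v5"]
scores = [0.9, 0.1, 0.5, 0.05, 0.2, 0.3]
kept_keys, kept_values = select_kv(keys, values, scores, budget=4)
print(kept_keys)  # ['k0', 'k2', 'k4', 'k5']
```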

Merging: Combining similar KV pairs can minimize redundancy, leading to more efficient memory utilization. By merging KV pairs that share similarities, the model reduces the storage requirements, streamlining the inference process.

Quantization: This technique reduces the memory and computational requirements of neural networks by mapping continuous values to a small set of discrete levels. When applied to the KV (key-value) cache, quantization maps the tensor values (which represent the keys and values in the cache) to a smaller set of discrete levels with lower precision.

This process effectively compresses the data, reducing the amount of memory needed to store the KV cache and speeding up computations.

For example, instead of using 32-bit floating-point numbers to represent the values, quantization might use 8-bit integers. This reduction in precision can significantly decrease the memory footprint and improve the efficiency of the model, especially during inference.
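The 8-bit case can be sketched with plain uniform (asymmetric) quantization. This is a generic illustration under simple assumptions, with one scale and offset for the whole list. Real KV cache quantizers choose the grouping of values much more carefully.

```python
def quantize(values, bits=8):
    """Uniform asymmetric quantization: floats -> integer codes, scale, offset."""
    levels = 2 ** bits - 1
    lo, hi = min(values), max(values)
    scale = (hi - lo) / levels or 1.0  # fall back to 1.0 for constant input
    codes = [round((v - lo) / scale) for v in values]
    return codes, scale, lo

def dequantize(codes, scale, lo):
    """Map integer codes back to approximate float values."""
    return [c * scale + lo for c in codes]

values = [-1.0, -0.25, 0.0, 0.5, 1.0]
codes, scale, offset = quantize(values, bits=8)
restored = dequantize(codes, scale, offset)
max_error = max(abs(a - b) for a, b in zip(values, restored))
```

Each code fits in one byte instead of four, and the reconstruction error is bounded by half a quantization step (`scale / 2`).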

There are two primary types:

i) Full Quantization: This method compresses both the model weights and the KV Cache, reducing the overall memory footprint of the entire model. Full quantization can significantly decrease the storage and computational requirements. However, it may also introduce quantization errors that can affect model performance.

ii) KV Cache-only Quantization: This approach specifically targets the KV Cache activations, selectively compressing them to conserve memory while leaving the model weights in their original state. Techniques such as per-channel quantization, sensitivity-weighted datatypes, and outlier isolation can be employed to enhance the precision and effectiveness of KV Cache quantization.

Low-Rank Decomposition: Approximating KV matrices with lower-rank representations can significantly cut down memory consumption.

Model-Level Optimizations

Architectural Innovations: Modifying the model's architecture to naturally promote KV reuse can lead to inherent efficiency improvements.

Key methods include:

i) Chunk Attention: Reuses the KV cache across requests that share a common prefix by organizing cached chunks in a prefix tree (dictionary tree), speeding up the prefill stage and optimizing GPU memory usage.

ii) Paged Attention: Uses a mapping table to store the KV cache in non-contiguous blocks of GPU memory, reducing fragmentation and improving inference efficiency.
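The mapping-table idea behind paged attention can be sketched as a small block allocator. The class below is a toy illustration with assumed names (`PagedKVCache`, `block_tables`), not the API of any serving framework. It stores placeholder strings where a real system would store key and value tensors.

```python
class PagedKVCache:
    """Toy block-table allocator; block and pool sizes are illustrative."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.pool = [[None] * block_size for _ in range(num_blocks)]
        self.block_tables = {}  # sequence id -> list of physical block ids
        self.lengths = {}       # sequence id -> number of tokens stored

    def append(self, seq_id, kv):
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:
            # The last block is full (or none exists): grab any free block.
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop())
        block = table[length // self.block_size]
        self.pool[block][length % self.block_size] = kv
        self.lengths[seq_id] = length + 1

    def get(self, seq_id, position):
        if not 0 <= position < self.lengths.get(seq_id, 0):
            raise IndexError(position)
        # Translate the logical position into (physical block, offset).
        block = self.block_tables[seq_id][position // self.block_size]
        return self.pool[block][position % self.block_size]

    def free(self, seq_id):
        """Return all blocks of a finished sequence to the free list."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)

cache = PagedKVCache(num_blocks=4, block_size=2)
for seq_id, kv in [("a", "a0"), ("b", "b0"), ("a", "a1"), ("a", "a2"), ("b", "b1")]:
    cache.append(seq_id, kv)

print(cache.block_tables["a"])  # [3, 1]: physical blocks need not be adjacent
print(cache.get("a", 2))        # a2
```

Because blocks are allocated on demand and returned when a sequence finishes, memory is never reserved for the maximum possible sequence length up front.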

Attention Mechanisms: Enhancing attention mechanisms to better utilize existing KV pairs can reduce the need for redundant computations. Key methods include:

i) Multi-Query Attention (MQA): All query heads share a single key-value head, so only one set of keys and values is cached per layer.

ii) Grouped Query Attention (GQA): Divides the query heads into groups and reduces memory overhead by sharing one key-value head within each group.
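The difference between the two schemes comes down to how query heads are mapped onto key-value heads. The helper names below are hypothetical, and the sketch assumes the number of query heads is divisible by the number of key-value heads.

```python
def kv_head_for(query_head, num_query_heads, num_kv_heads):
    """Index of the key-value head that a given query head reads from."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV heads")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size

def kv_cache_reduction(num_query_heads, num_kv_heads):
    """Factor by which the cache shrinks versus one KV head per query head."""
    return num_query_heads // num_kv_heads

mha = [kv_head_for(h, 8, 8) for h in range(8)]  # standard multi-head attention
gqa = [kv_head_for(h, 8, 2) for h in range(8)]  # grouped query attention
mqa = [kv_head_for(h, 8, 1) for h in range(8)]  # multi-query attention

print(gqa)                       # [0, 0, 0, 0, 1, 1, 1, 1]
print(kv_cache_reduction(8, 2))  # 4
```

With 8 query heads, GQA with 2 key-value heads stores a quarter of the keys and values, and MQA stores an eighth.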

System-Level Approaches

Memory Management: Implementing effective memory management strategies ensures optimal allocation and deallocation of KV caches during inference. Proper memory handling prevents bottlenecks, maintaining smooth model operations. Key methods include:

i) Speculative KV Cache Offloading: Offloads most of the KV cache to CPU memory, retains the critical entries on the GPU, and speculatively reloads the parts likely to be needed, saving GPU memory.

Scheduling: Efficient task scheduling can minimize latency and improve throughput in LLM applications. By organizing tasks effectively, the model can process information more swiftly, enhancing real-time performance.

10 Million Context Length LLM Inference with KVQuant

A recent study explores multiple KV cache optimization and compression techniques and identifies the patterns in KV cache activations that matter for ultra-low precision quantization (compressing the data stored in the cache to very low bit levels, often below 4 bits). Some of the patterns observed are:

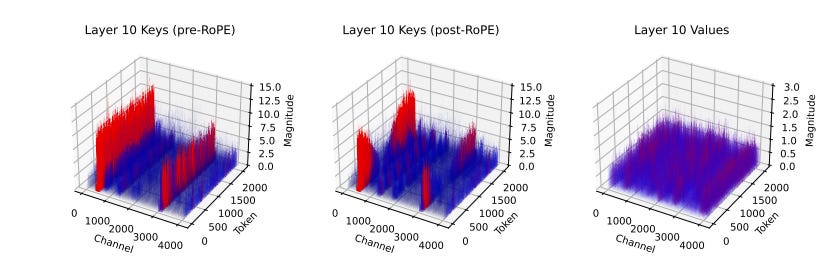

Before applying RoPE: The keys show unusual values (outliers) in certain channels (dimensions or aspects of the data).

After applying RoPE: The unusual values (outliers) in these channels become less predictable and consistent.

This inconsistency poses a challenge for low precision quantization.

Current methods for quantization, whether uniform or non-uniform, do not place their quantization levels where they best represent the actual distribution of values.
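The effect of RoPE on an outlier channel, noted above, can be reproduced with a few lines of code. The sketch below assumes the adjacent-pair RoPE layout (channels 2i and 2i+1 are rotated together) and the common base of 10000. Some implementations pair channels differently, but the effect is the same. The key vector is made up for illustration.

```python
import math

def apply_rope(vec, position, base=10000.0):
    """Rotate consecutive channel pairs by position-dependent angles."""
    dim = len(vec)  # assumed to be even
    out = list(vec)
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = vec[i] * c - vec[i + 1] * s
        out[i + 1] = vec[i] * s + vec[i + 1] * c
    return out

# Made-up key whose channel 0 is an outlier at every token position.
pre_rope = [8.0, 0.1, 0.2, -0.1]
post_rope = [apply_rope(pre_rope, position) for position in range(4)]

for position, key in enumerate(post_rope):
    print(position, [round(x, 2) for x in key])
```

Before RoPE, channel 0 holds the same large value at every position, so one per-channel range covers it well. After RoPE, that magnitude is rotated back and forth between channels 0 and 1 depending on the position, which widens the range both channels must cover.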

KVQuant addresses all of the above challenges and enables serving LLaMA-7B with a context length of up to 1 million on a single A100-80GB GPU and up to 10 million on an 8-GPU system. The methodology of KVQuant comprises several innovative techniques:

1. Per-Channel Key Quantization: Instead of sharing one quantization range across all dimensions of a token, this approach quantizes the Key activations channel by channel, so that each channel gets its own range. Because the outliers are concentrated in specific channels, this aligns the quantization process more closely with the natural distribution of the data and enhances the accuracy of the quantized representations.

2. Pre-RoPE Key Quantization: Rotary positional embeddings (RoPE) are used in transformers to incorporate positional information. Quantizing the Key activations before applying RoPE, and applying RoPE after dequantization, keeps the outlier channels consistent across tokens and mitigates the impact of these embeddings on the quantization process, leading to more accurate quantization.

3. Non-Uniform KV Cache Quantization: Recognizing that different layers of the model have varying sensitivities to quantization, this technique employs per-layer sensitivity-weighted non-uniform data types. This tailored approach ensures that each layer's unique characteristics are considered, improving the overall quantization performance.

4. Per-Vector Dense-and-Sparse Quantization: Within each vector, certain elements (outliers) can disproportionately affect the quantization range. By isolating these outliers separately for each vector, this method minimizes skews in quantization ranges, leading to more precise quantization and reduced information loss.
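Two of the ideas above, per-channel ranges and per-vector outlier isolation, can be illustrated on toy data. This is a simplified sketch under stated assumptions rather than KVQuant's implementation: it uses plain uniform quantization instead of sensitivity-weighted non-uniform datatypes, it picks a fixed number of outliers per vector, and the `keys` matrix is made up.

```python
def fake_quantize(values, bits):
    """Uniformly quantize a list of floats and map it straight back to floats."""
    levels = 2 ** bits - 1
    lo, hi = min(values), max(values)
    scale = (hi - lo) / levels or 1.0  # fall back to 1.0 for constant input
    return [round((v - lo) / scale) * scale + lo for v in values]

def mean_abs_error(a, b):
    flat_a = [x for row in a for x in row]
    flat_b = [x for row in b for x in row]
    return sum(abs(x - y) for x, y in zip(flat_a, flat_b)) / len(flat_a)

def transpose(matrix):
    return [list(column) for column in zip(*matrix)]

def per_token(keys, bits):
    """One quantization range per row (token)."""
    return [fake_quantize(row, bits) for row in keys]

def per_channel(keys, bits):
    """One quantization range per column (channel)."""
    return transpose([fake_quantize(col, bits) for col in transpose(keys)])

def dense_and_sparse(vector, bits, num_outliers=1):
    """Keep the largest-magnitude entries in full precision, quantize the rest."""
    order = sorted(range(len(vector)), key=lambda i: abs(vector[i]), reverse=True)
    sparse = {i: vector[i] for i in order[:num_outliers]}
    dense_ids = [i for i in range(len(vector)) if i not in sparse]
    dense = fake_quantize([vector[i] for i in dense_ids], bits)
    out = [0.0] * len(vector)
    for i, v in zip(dense_ids, dense):
        out[i] = v
    for i, v in sparse.items():
        out[i] = v  # outliers bypass quantization entirely
    return out

# Toy keys: 4 tokens x 4 channels, with channel 0 acting as an outlier channel.
keys = [
    [9.0, 0.10, -0.20, 0.30],
    [8.5, -0.30, 0.25, -0.10],
    [9.5, 0.20, -0.15, 0.05],
    [8.8, -0.05, 0.10, -0.25],
]
err_token = mean_abs_error(keys, per_token(keys, bits=3))
err_channel = mean_abs_error(keys, per_channel(keys, bits=3))

vector = [0.1, -0.2, 12.0, 0.3, -0.1]
plain = fake_quantize(vector, bits=3)
isolated = dense_and_sparse(vector, bits=3)
err_plain = max(abs(a - b) for a, b in zip(vector, plain))
err_isolated = max(abs(a - b) for a, b in zip(vector, isolated))
```

On this data `err_channel` is smaller than `err_token`, because the outlier channel no longer stretches the range used for the small channels. Likewise `err_isolated` is smaller than `err_plain`, because the single outlier is stored separately and the remaining values share a much tighter range.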

KVQuant offers a smart way to reduce memory usage in large language models with little loss in model performance. On top of that, its custom CUDA kernels achieve speedups of up to about 1.7× compared to baseline fp16 operations. This paves the way for exciting opportunities to optimize computation efficiency, explore quantization-aware training (QAT), push for even smaller KV caches, and extend the method to other architectures like Mixture of Experts (MoE) and diffusion models. Overall, it is a substantial step towards making ultra-long-context LLMs more practical, with ample potential to push the boundaries even further.